OMILIA CONVERSATIONAL VOICE

Automate your contact center with Conversational AI to provide a phone service your customers will love.

With rising operational costs, increasing agent churn and service quality that leaves customers dissatisfied, contact centers are turning to AI to improve the customer experience. With Omilia Conversational AI for voice channels, gone are the days of long, confusing IVR menus, ineffective call routing and frustrated customers waiting in queues.

Powered by Generative AI, Omilia automates more than 90% of customer interactions, to increase first-call resolution and deliver a human-like engaging personalized experience.

Automate an array of service queries with AI designed for complex voice interactions, without needing human agents to intervene, and guarantee your contact center provides the exceptional service customers demand.

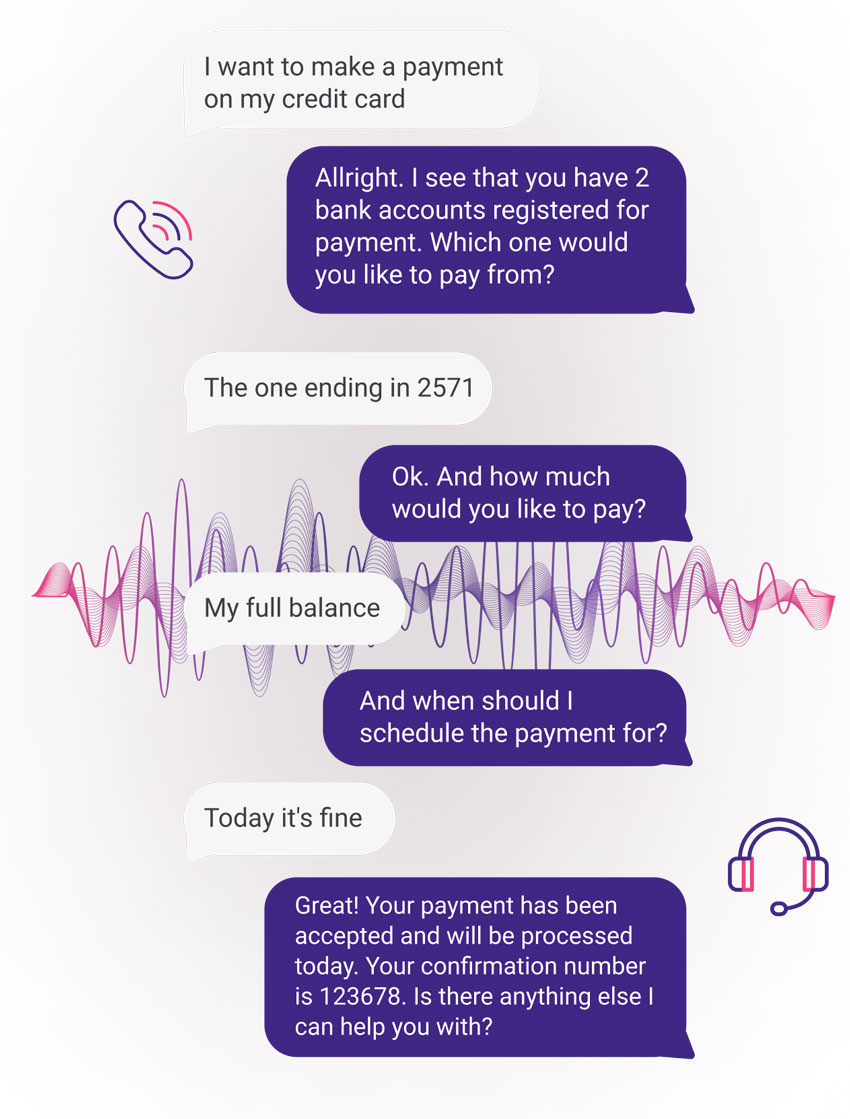

Drive Engaging Conversations

With out-of-the-box, industry-specific AI models that are pre-trained on millions of customer interactions our bots provide 96% intent understanding accuracy on day one. They understand context, clarify responses and ask follow up questions to provide an engaging experience for customers.

Reduce Wait Times

The voice bots can recognize multiple action items, or intents, in a single call and can logically execute the tasks. With self-service that efficiently handles high volumes of routine calls, you can reduce wait times and increase first call resolution.

Personalize Customer Engagement

Dynamic and context-aware voice bots understand sentiment, past interactions and preferences to adapt in real-time during the call. They tailor their response to create a personalized and engaging experience as unique as your customers are.

The highest cost in a contact center is undoubtedly the human capital. With a solution tailored to your needs, and voice bots that reflect your brand’s voice, you can cost-effectively deliver a level of service that sets you apart from your competition.

Reduce Operational Costs

Omilia Conversational Voice provides fast and precise categorization of customer intents and call routing reducing the cost per interaction, improving first call resolution and deflection, and minimizing the average handle time.

Increase Self-Service Automation

Customers speak in natural language to the virtual agents and even if customers switch topics or interrupt during the dialog, the conversational AI platform can understand what they need and successfully contain the call, so you can free up agents to deal with more challenging tasks.

Provide Seamless Interactions

The AI-powered voice bots can detect disambiguation or incomplete responses from customers and ask for clarification or suggest a few options based on the context, to confirm the most appropriate action and still provide a seamless human-like conversation.

01/06/2024

Reviewer Role:

Engineering - Other

Company Size:

10B - 30B USD

Industry:

Banking Industry

Overall, a very positive experience. Vendor is very receptive of their customer feedback. Very good customer service.

Read More24/07/2024

Reviewer Role:

IT Services

Company Size:

10B - 30B USD

Industry:

Banking Industry

Every employee of Omilia I came into contact with is proud of their company and wants every engagement to be a successful one.

Read More05/07/2024

Reviewer Role:

IT

Company Size:

10B - 30B USD

Industry:

Energy and Utilities Industry

Our overall experience with implementing with the Omilia project team was excellent. They quickly understood our requirements and also offered feedback and potential room for improvement.

Read More05/07/2024

Reviewer Role:

IT

Company Size:

10B - 30B USD

Industry:

Energy and Utilities Industry

Collaboration with the project team and their ability to deliver on complex use case requirements such as multi intent transaction automation, alpha-numeric, and multi-language, allowed us to achieve deployment with faster speed to market.

Read More05/07/2024

Reviewer Role:

IT

Company Size:

1B - 3B USD

Industry:

Banking Industry

Stable network that works great for the self-service of our client's needs. Seamless workflow when changes are needed to update intents

Read More11/07/2024

Reviewer Role:

IT

Company Size:

10B - 30B USD

Industry:

Travel and Hospitality Industry

Omilia has gone above and beyond to meet our needs. Our deployment was quick, and the support was excellent.

Read More11/07/2024

Reviewer Role:

IT

Company Size:

10B - 30B USD

Industry:

Travel and Hospitality Industry

We have been very impressed with Omilia's IVR solution and people. Everything from interacting with Omilia staff to requirements gathering and implementation has been exceptional. This IVR was selected due to its AI abilities and so far it has delivered what was promised.

Read More11/07/2024

Reviewer Role:

Engineering - Other

Company Size:

10B - 30B USD

Industry:

Banking Industry

Omilia has been very responsive to issues and questions we have had through the entire process.

Read More15/07/2024

Reviewer Role:

Operations

Company Size:

250M - 500M USD

Industry:

Energy and Utilities Industry

Omilia teams were able to deliver on complex use cases quickly, helping us meet our delivery schedule. They continue to collaborate closely with our teams on IVR optimizations.

Read More15/07/2024

Reviewer Role:

Customer Service and Support

Company Size:

10B - 30B USD

Industry:

Manufacturing Industry

Excellent experience. Leveraged Omilia to deliver an improved customer experience.

Read More16/07/2024

Reviewer Role:

Operations

Company Size:

250M - 500M USD

Industry:

Travel and Hospitality Industry

Omilia has been a great partner from the first POC test to market test.

Read More17/07/2024

Reviewer Role:

Product Management

Company Size:

<50M USD

Industry:

Energy and Utilities Industry

Very responsive and knowledgeable, easy to work with, work ethics are high

Read More18/07/2024

Reviewer Role:

IT

Company Size:

1B - 3B USD

Industry:

Transportation Industry

The implementation team from Omilia was amazing and made our rollout simple. Omilia is a true partner and focuses on how to further our business needs.

Read More18/07/2024

Reviewer Role:

Customer Service and Support

Company Size:

1B - 3B USD

Industry:

Banking Industry

They are always supportive and deliver great service and assistance when needed

Read More19/07/2024

Reviewer Role:

Engineering - Other

Company Size:

250M - 500M USD

Industry:

Banking Industry

Ability to deliver complex use cases with top quality; agility and top speed. Hundreds of out of the box functionalities in the contact center space.

Read More19/07/2024

Reviewer Role:

Product Management

Company Size:

<50M USD

Industry:

Banking Industry

Omilia has been a great partner for us to date, and has been particularly flexible/accomodating to our unique needs. We have a strong POV/vision of vision for IVR both from customer/business and E2E tech stack perspectives, of which Omilia has been fully aligned and supporting.

Read More26/07/2024

Reviewer Role:

Customer Service and Support

Company Size:

500M - 1B USD

Industry:

Energy and Utilities Industry

The team has been very attentive to our needs and expectations and has been fairly consistent with communication. The uptime of the system and updates has been satisfactory.

Read More29/07/2024

Reviewer Role:

Customer Service and Support

Company Size:

500M - 1B USD

Industry:

Banking Industry

This is an exclusive package of functions that is based on the cloud. NLU corresponders perfectly to the Greek Language. The cooperation is at a very high level and efficient and with deliverables as agreed.

Read More29/07/2024

Reviewer Role:

Customer Service and Support

Company Size:

3B - 10B USD

Industry:

Healthcare and Biotech Industry

The Omilia team is compiled of experts. They care about the functionality of their product and the success of their clients. I felt valued as a customer.

Read More29/07/2024

Reviewer Role:

Software Development

Company Size:

3B - 10B USD

Industry:

Banking Industry

Overall, my experience with Omilia has been very good. It's rare to engage with a vendor where it feels like a true partnership, and they care as much about the customer experience as we do.

Read More01/08/2024

Reviewer Role:

Sales and Business Development

Company Size:

250M - 500M USD

Industry:

Telecommunication Industry

Our ICT Sales team resells OCP as part of ICT projects solutions. This is a great experience because: 1) OCP is user-friendly and allows quick deployment, 2) OCP is well accepted from the part of our customers, 3) Omilia is a well committed partner.

Read More05/08/2024

Reviewer Role:

Engineering - Other

Company Size:

30B + USD

Industry:

Banking Industry

Omilia has been an outstanding partner, providing excellent resources to support our growth and system utilization. Their product team aligns with our vision and has delivered numerous enhancements to meet our specific needs.

Read More28/08/2024

Reviewer Role:

IT

Company Size:

10B - 30B USD

Industry:

Energy and Utilities Industry

Omilia has been a great partner. They have delivered amazing AI solutions that fits our needs. The team that has been working with us are phenomenal and best at what they do. They are customer focused and always come up with innovative solutions that are very helpful to our business. Omilia team is very adjustable to the time and needs of the customer.

Read More